Language detection (or identification) is a fascinating branch of Natural Language Processing. Its goal is to create a model that is able to detect the language a text is written in.

Data Scientists usually employ neural network models to accomplish such a goal. In this article, I show how to create a simple language detection model in Python using a Naive Bayes model.

The problem

The problem we are going to face is to create a model that, once fed with a text, is able to detect its language. The text can be a sentence, a word, a more complicated text, and so on. The output variable can be, for example, the language code (like “en” for English).

Ideally, the model should detect the language of a text even if the text contains words it has not seen in the training phase. We want a model that generalizes the underlying structure of a language well enough to detect it properly.

Let’s see how to create such a model.

The data

I’m going to train this model to detect three languages: Italian, English and German. For the Italian language, I’m going to train the model using the text of a short horror tale of mine, L’isola del male. For the English language, I’ll use the English translation of this tale, The island of evil. For the German language, I’ll use the text of Also sprach Zarathustra by Friedrich Nietzsche, found here: http://www.nietzschesource.org/#eKGWB/Za-I. I’ll split these documents into sentences, and the final dataset of sentences will be split into training and test sets.

Vectorization using char bigrams

So, we are talking about a classification problem whose target variable is a class with 3 possible values: it, en, de.

Now, let’s talk about the features. The features I’m going to use are char bigrams, which are pairs of consecutive characters in a sentence. Consider, for example, the word “planet”. In this case, the char bigrams are “pl”, “la”, “an”, “ne”, “et”.
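The bigram extraction described above can be sketched in a few lines of plain Python. The function name `char_bigrams` is my own choice for illustration; it is not part of any library.

```python
def char_bigrams(text):
    """Return the overlapping 2-character substrings of `text`, in order."""
    return [text[i:i + 2] for i in range(len(text) - 1)]


bigrams = char_bigrams("planet")
print(bigrams)  # ['pl', 'la', 'an', 'ne', 'et']
```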

Why should we use char bigrams? Because they reduce dimensionality. The Latin alphabet is made of 26 letters. Adding the 10 digits and some other characters and symbols, we reach roughly 50 distinct symbols. The highest number of char bigrams we can have is then 50*50 = 2500.

If we used the classical word-driven vectorization, we would have a much higher dimensionality, because each language may have hundreds of thousands of words and we may need to vectorize them all, creating vectors with a huge number of features and suffering the curse of dimensionality. Using char bigrams, we get a maximum of 2500 components, and they are shared among all the languages that use the Latin alphabet. It’s pretty impressive, because we can use this set of bigrams as a general feature set for every language using such an alphabet. Moreover, we are not bound to a pre-defined vocabulary of words, so our model will work even with words it has never been trained on, as long as they are made of char bigrams that appear in the original training corpus.

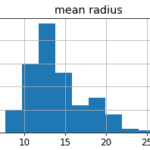

In our particular case, the complete word vocabulary of the three documents contains 7062 distinct words. If we work with char bigrams, we get 842 features. We have reduced the dimensionality of our problem by about 88%!

So, these are the features. For each sentence, we calculate the char bigrams and count how many times each bigram appears in the sentence. This count fills the corresponding component of the vector that represents the sentence.
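To make the counting step concrete, here is a minimal sketch that turns a sentence into a vector of bigram counts. The helper names and the toy vocabulary are assumptions of mine, chosen only for illustration; in the real workflow the vocabulary comes from the training corpus.

```python
from collections import Counter


def bigram_counts(sentence):
    """Count how many times each char bigram occurs in `sentence`."""
    return Counter(sentence[i:i + 2] for i in range(len(sentence) - 1))


def to_vector(sentence, vocabulary):
    """Map a sentence to a list of counts, one per bigram in `vocabulary`."""
    counts = bigram_counts(sentence)
    return [counts[bigram] for bigram in vocabulary]


# Toy vocabulary, for illustration only.
vocabulary = ["an", "na", "ba", "zz"]
vector = to_vector("banana", vocabulary)
print(vector)  # [2, 2, 1, 0]
```

A bigram that never appears in the sentence simply gets a zero, so every sentence maps to a vector of the same length.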

The model

The model I’m going to use is a Multinomial Naive Bayes. It’s a very simple model, and Naive Bayes is, generally speaking, very effective in Natural Language Processing. It has almost no hyperparameters, so we can focus on the pre-processing phase, which is the most critical.

According to scikit-learn docs, Multinomial Naive Bayes can take count vectors as input features, which is exactly what we need.

The code

Let’s import some libraries:
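A plausible set of imports for the steps that follow is sketched below. It assumes scikit-learn and NumPy are installed; the exact list used for the original experiment may have been different.

```python
import re

import numpy as np
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline
```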

The corpus we are going to work with is made up of 3 text files. We have to clean the text, vectorize it, and then we can train the model.

Data preprocessing and vectorization

First, we must clean our text in order to split it into single sentences. Let’s write a function that takes a text file and removes double spaces, quotes, and useless punctuation, returning a list of sentences.
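A cleaning function of this kind might look like the sketch below. The set of characters treated as quotes, the sentence terminators, and the function names are assumptions of mine, so adapt them to your own documents.

```python
import re


def split_sentences(text):
    """Clean raw text and split it into a list of sentences."""
    # Remove straight and curly double quotes (assumed set of quote characters).
    text = re.sub(r'["“”«»]', "", text)
    # Collapse runs of whitespace, including newlines and double spaces.
    text = re.sub(r"\s+", " ", text)
    # Split on sentence terminators, then trim leftover punctuation.
    parts = re.split(r"[.!?]+", text)
    sentences = [part.strip(" ,;:-") for part in parts]
    return [sentence for sentence in sentences if sentence]


def load_sentences(path):
    """Read a UTF-8 text file and return its sentences."""
    with open(path, encoding="utf-8") as handle:
        return split_sentences(handle.read())


sample = 'He said: "Run!"  Then  silence.\nNobody moved.'
print(split_sentences(sample))
```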

Each document is stored in a separate txt file. We can load the sentences of the three documents, create an array that contains all the sentences, and another one that contains the language related to each sentence.
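The two parallel arrays can be built as in the following sketch. The toy sentences stand in for the content of the three txt files, and `build_corpus` is a hypothetical helper name.

```python
def build_corpus(sentences_by_language):
    """Flatten {language_code: [sentence, ...]} into two parallel lists."""
    sentences, labels = [], []
    for language, language_sentences in sentences_by_language.items():
        sentences.extend(language_sentences)
        labels.extend([language] * len(language_sentences))
    return sentences, labels


# Toy stand-in for the sentences loaded from the three documents.
toy_documents = {
    "it": ["Il cielo era scuro", "Nessuno parlava"],
    "en": ["The sky was dark"],
    "de": ["Der Himmel war dunkel"],
}
sentences, labels = build_corpus(toy_documents)
print(list(zip(labels, sentences)))
```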

The complete corpus size is 4195 sentences. Here follows an example:

As you can see, each sentence is correctly related to its language.

The distribution of the languages across the corpus is quite uniform, so there are no unbalanced classes.

We can now split our dataset into training and test sets and start working with the models.
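The split can be sketched with scikit-learn's `train_test_split`. The toy sentences, the 25% test size, the stratification, and the random seed are assumptions for illustration, not the settings of the original experiment.

```python
from sklearn.model_selection import train_test_split

# Toy corpus: 4 sentences per language, for illustration only.
sentences = [
    "Il cielo era scuro", "Nessuno parlava",
    "La casa era vuota", "Il mare era calmo",
    "The sky was dark", "Nobody spoke",
    "The house was empty", "The sea was calm",
    "Der Himmel war dunkel", "Niemand sprach",
    "Das Haus war leer", "Das Meer war ruhig",
]
labels = ["it"] * 4 + ["en"] * 4 + ["de"] * 4

# stratify=labels keeps the language proportions similar in both sets.
X_train, X_test, y_train, y_test = train_test_split(
    sentences, labels, test_size=0.25, stratify=labels, random_state=0
)
print(len(X_train), len(X_test))
```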

First, we use the CountVectorizer object of sklearn to count, for each char bigram, its occurrences in each sentence. Then we create the pipeline that vectorizes our data and passes it to the model, which is a Multinomial Naive Bayes.
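A pipeline along these lines could be built as follows. The step names "vectorizer" and "model" are my own choice; `analyzer="char"` with `ngram_range=(2, 2)` is what tells CountVectorizer to count char bigrams.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

# Count char bigrams instead of words.
vectorizer = CountVectorizer(analyzer="char", ngram_range=(2, 2))

pipeline = Pipeline([
    ("vectorizer", vectorizer),
    ("model", MultinomialNB()),
])

# Quick check of the features the vectorizer extracts from a single word.
counts = vectorizer.fit_transform(["planet"])
print(sorted(vectorizer.vocabulary_))
```

Note that CountVectorizer lowercases the text by default and, with `analyzer="char"`, also counts bigrams that contain spaces, so word boundaries contribute features too.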

We can now fit our pipeline and calculate predictions on the test set:
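Fitting and predicting can be sketched as below. The tiny training and test sets are made up for illustration, so the predictions say nothing about the quality of the real model.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

# Toy data, for illustration only.
X_train = [
    "Il cielo era scuro", "Nessuno parlava nella casa",
    "The sky was dark", "Nobody spoke in the house",
    "Der Himmel war dunkel", "Niemand sprach im Haus",
]
y_train = ["it", "it", "en", "en", "de", "de"]
X_test = ["The night was cold", "La notte era fredda", "Die Nacht war kalt"]

pipeline = Pipeline([
    ("vectorizer", CountVectorizer(analyzer="char", ngram_range=(2, 2))),
    ("model", MultinomialNB()),
])

# fit() learns the bigram vocabulary and the Naive Bayes parameters together.
pipeline.fit(X_train, y_train)
predictions = pipeline.predict(X_test)
print(list(zip(X_test, predictions)))
```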

We can finally take a look at the confusion matrix:
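The confusion matrix can be computed with `sklearn.metrics.confusion_matrix`. The labels below are invented to show the mechanics; they are not the results of the model described in this article.

```python
from sklearn.metrics import confusion_matrix

# Made-up true and predicted labels, for illustration only.
y_test = ["it", "it", "en", "en", "de", "de"]
predictions = ["it", "en", "en", "en", "de", "de"]

# Fix the row/column order explicitly: rows are true labels, columns are predictions.
languages = ["it", "en", "de"]
matrix = confusion_matrix(y_test, predictions, labels=languages)
print(matrix)
```

In this toy example, the only value outside the diagonal is the Italian sentence predicted as English.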

It’s quite diagonal. The classification error seems to be very low.

Let’s see the classification report:
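The report can be produced with `sklearn.metrics.classification_report`. Again, the labels are invented for illustration and do not reproduce the figures discussed in this article.

```python
from sklearn.metrics import accuracy_score, classification_report

# Made-up true and predicted labels, for illustration only.
y_test = ["it", "it", "en", "en", "de", "de"]
predictions = ["it", "en", "en", "en", "de", "de"]

# Per-language precision, recall and F1, plus the overall accuracy.
report = classification_report(y_test, predictions, labels=["it", "en", "de"])
accuracy = accuracy_score(y_test, predictions)
print(report)
print(accuracy)
```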

As you can see, we reach an overall accuracy of 97%. It’s pretty impressive if we consider that we are working with only three documents and a dataset of fewer than 5,000 records.

Let’s stress the model

Now, let’s stress our model a bit.

Here follows a set of sentences in the three different languages. The “Comment” column is a comment of mine that explains some features of the sentence, including its real language. The “Detected language” column is the ISO code of the language predicted by the model.

As we can see, the model does pretty well even with mixed-language sentences. There are still some errors (for example, “hyperparameters” is wrongly detected as Italian), but the results look robust.

Even the word “Harrier”, which appears in both the Italian and the English text, has correctly been recognized as an English word.

A simple API

I’ve embedded the model in a pickle file and created a simple API using Flask, deploying it on Heroku. It’s a development API useful for testing purposes. I kindly encourage you to try it and give me your feedback.
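Saving a fitted pipeline with pickle can be sketched as follows. The toy training data is an assumption for illustration, and the round trip happens in memory; writing the same bytes to a file works the same way. Keep in mind that a pickle file should only be loaded if it comes from a trusted source.

```python
import pickle

from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline

pipeline = Pipeline([
    ("vectorizer", CountVectorizer(analyzer="char", ngram_range=(2, 2))),
    ("model", MultinomialNB()),
])
# Toy training data, for illustration only.
pipeline.fit(
    ["Il cielo era scuro", "The sky was dark", "Der Himmel war dunkel"],
    ["it", "en", "de"],
)

# Serialize the fitted pipeline to bytes and load it back.
payload = pickle.dumps(pipeline)
restored = pickle.loads(payload)

sample = ["The night was cold"]
print(list(restored.predict(sample)) == list(pipeline.predict(sample)))
```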

The endpoint is: https://gianlucamalato.herokuapp.com/text/language/detect/

The request must be a JSON document with the ‘text’ key, whose value is the text to process. The method is POST.

The response is a JSON document containing the original text in the ‘text’ field and the detected language code in the ‘language’ field.

Here’s an example of how to call my API:
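A call of this kind can be sketched with the Python standard library alone. The endpoint and the ‘text’ and ‘language’ fields come from the description above; the function names, the Content-Type header and the timeout are assumptions of mine.

```python
import json
import urllib.request

API_URL = "https://gianlucamalato.herokuapp.com/text/language/detect/"


def build_request(text):
    """Build the POST request carrying the JSON document {"text": ...}."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json"},  # assumed header
        method="POST",
    )


def detect_language(text, timeout=10):
    """Send the request and return the 'language' field of the JSON response."""
    with urllib.request.urlopen(build_request(text), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["language"]


# Building the request does not touch the network.
request = build_request("Hello world")
print(request.get_method(), request.full_url)
# detect_language("Hello world") would perform the actual call.
```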

I’ve created another GET method that shows the model modification date and the supported languages:

Conclusions

In this article, I have shown how to create a simple language detection model using Naive Bayes. The power of the model relies, as usual, on the input features, and using the char bigrams seems to have been a good idea.

If you like this model, please stress it using my API and give me your feedback.